MARKET-DRIVEN CURRICULUM DESIGNED BY EXPERTS

Mentor Support

Get weekly one-on-one mentorship with personalized guidance to help you navigate every challenge.

Affordable Tuition

Earn a high-quality education that doesn’t come with a hefty price tag.

High-Demand Skills

Gain hands-on experience and high-demand skills, so you stand out as a top job candidate.

Job-Ready Portfolio

In under six months, graduate with a portfolio showcasing your expertise and preparing you for employment.

26-Week Course

WHAT YOU’LL LEARN IN THIS BOOTCAMP

Guided by industry-leading instructors and mentors, you’ll learn how to process vast data sets efficiently and extract valuable insights. You’ll gain hands-on experience working with cutting-edge data technologies, making you job-ready and skilled at driving innovation.

Data Engineering Prerequisites:

- Technical aptitude

- Logical thinking

- Comfortable with OS file system concepts

- Experience downloading and installing software

- Experience working with data connections in Excel or other applications

- Experience creating macros and functions in Excel or other applications

- Some exposure to programming is helpful but not required

SQL and Python Fundamentals

Weeks 1-4

Python

Python is a programming language well suited to data processing, automation, and analysis. Its intuitive syntax and vast libraries make it essential for data engineers to manage and interpret datasets effectively.

Error Handling

Error handling ensures that software operates smoothly even when unexpected issues arise. It’s crucial to handle discrepancies in data or processing errors to maintain data integrity and system reliability.
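As a small taste of what this looks like in practice, here is a minimal Python sketch. The function name `parse_amounts` and the sample values are made up for illustration and are not taken from the course materials. It converts raw strings to numbers and sets bad values aside instead of letting one bad record stop the whole job.

```python
def parse_amounts(raw_values):
    """Convert raw values to floats, collecting bad values instead of crashing."""
    clean, rejected = [], []
    for value in raw_values:
        try:
            clean.append(float(value))
        except (TypeError, ValueError):
            # Keep the bad value so it can be reviewed later.
            rejected.append(value)
    return clean, rejected


# Hypothetical sample input: two valid numbers, one placeholder, one missing value.
clean, rejected = parse_amounts(["19.99", "n/a", None, "5"])
print(clean)     # [19.99, 5.0]
print(rejected)  # ['n/a', None]
```

Catching only `TypeError` and `ValueError` is a deliberate choice: unexpected errors still surface rather than being silently swallowed.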

Data Structures

Data structures are foundational ways of organizing data for efficient access and modification. For data engineers, understanding the right data structure is key to optimizing performance and creating scalability in data processing tasks.
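To make the point about choosing the right structure concrete, here is a minimal Python sketch. The customer records and the helper `find_in_list` are invented for illustration. It looks up the same record two ways: by scanning a list, and by indexing a dictionary keyed on the customer ID.

```python
# Hypothetical sample records, not real course data.
customers = [
    {"id": 101, "name": "Ada"},
    {"id": 102, "name": "Grace"},
    {"id": 103, "name": "Linus"},
]


def find_in_list(records, customer_id):
    """Scan every record until the ID matches (slower as the list grows)."""
    for record in records:
        if record["id"] == customer_id:
            return record
    return None


# Build a dictionary once, then look records up directly by key.
customers_by_id = {record["id"]: record for record in customers}

print(find_in_list(customers, 103)["name"])  # Linus
print(customers_by_id[103]["name"])          # Linus
```

Both approaches return the same record, but the dictionary lookup does not need to walk the whole collection, which matters once datasets get large.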

Logic

Logic forms the basis of every algorithm, dictating how software makes decisions and processes data. Mastering logical constructs allows data engineers to design efficient data pipelines and troubleshoot potential issues.

Introduction to Cloud Technologies and AWS

Weeks 5-9

AWS

Amazon Web Services (AWS) is a cloud services platform, providing an array of tools and infrastructure for data storage, processing, and analysis. Mastering AWS means harnessing the power of the cloud to scale and optimize data pipelines.

SageMaker

SageMaker is a service dedicated to machine learning, enabling users to build, train, and deploy models at scale. Data engineers utilizing SageMaker can integrate sophisticated machine learning capabilities into their data pipelines.

Athena

Athena allows users to analyze data directly in Amazon S3 using standard SQL. For data engineers, Athena offers a swift and cost-effective way to run ad-hoc queries without the need for complex infrastructure, making data analysis more accessible.

RDS

Amazon Relational Database Service (RDS) is a cloud-based database service that simplifies the process of setting up, operating, and scaling databases. RDS provides a reliable and scalable solution for storing structured data.

AWS Glue

AWS Glue makes it easy to prepare and move data between diverse data sources. For data engineers, AWS Glue automates the time-consuming tasks of data discovery, conversion, and job scheduling.

EMR Clusters

Amazon Elastic MapReduce (EMR) provides a managed cluster platform that simplifies running big data frameworks. EMR Clusters enable the processing of large amounts of data in parallel, facilitating faster insights and allowing for scalable data analytics solutions.

Introduction to Hadoop and Distributed Computing

Weeks 10-12

Hadoop

Hadoop is a distributed framework designed to store and process vast amounts of data across clusters of computers. Leveraging Hadoop leads to scalable and fault-tolerant data management, making it a cornerstone in big data analytics.

Hive

Hive is a data warehousing infrastructure built on top of Hadoop, facilitating data summarization, querying, and analysis. With Hive, complex big data tasks become accessible, enabling data engineers to manage large datasets without deep programming expertise.

Hue

Hue is an open-source user interface that simplifies the use of Hadoop and its related tools. It provides a web-based platform where users can interact with big data applications, streamlining tasks like data exploration, workflow management, and job monitoring.

Spark

Spark is an open-source, distributed computing system that excels in real-time data processing and analytics. Its in-memory capabilities allow for rapid data operations, making it a top choice for tasks ranging from machine learning to stream processing.

Big Data Ingestion in AWS

Learn how to gather and bring vast amounts of data into AWS, preparing it for further analysis and use.

Weeks 13-15

Amazon Aurora

Amazon Aurora is a relational database service that combines the speed and availability of high-end commercial databases with the simplicity and cost-effectiveness of open-source databases. Aurora automatically scales, replicates, and patches, ensuring optimal performance.

Amazon Kinesis

Amazon Kinesis offers powerful solutions for real-time data streaming, ingestion, and processing. Whether ingesting application logs, analyzing real-time metrics, or responding to live events, Kinesis allows engineers to harness the potential of real-time data.

Ingestion in AWS

Ingestion in AWS refers to the process of importing, transferring, and loading data into the AWS ecosystem. For data engineers, understanding the various tools and methods for data ingestion in AWS ensures efficient and timely data availability.

Building a Data Lake in AWS

Understand the concept of a Data Lake, a vast pool of raw data stored in AWS, where it’s kept ready for analysis without a fixed format or purpose.

Weeks 16-17

Storage Concepts

Storage concepts encompass the various methods and best practices used to save, retrieve, and manage data. Grasping these concepts is essential, as it determines how data is accessed, its speed of retrieval, and the cost implications of storage solutions.

Amazon Redshift

Amazon Redshift is a fully managed data warehouse service in the cloud, optimized for online analytic processing (OLAP). It offers fast query performance making it a go-to solution for analyzing large datasets.

Data Lake

A Data Lake is a centralized repository that allows you to store data at any scale. With its flexibility, data lakes enable comprehensive data analysis and exploration, accommodating diverse data sources and formats.

Relational Data Modeling

Master the art of structuring your data in tables and defining relationships between them, making it easier to store, retrieve, and make sense of data.

Week 18

Normalization

Normalization is the process of organizing data in a database to reduce redundancy. By structuring data in this way, databases become more efficient, making data retrieval and updates more streamlined.
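A minimal sketch of the idea, using Python's built-in `sqlite3` module as a stand-in database. The table names, columns, and sample rows are assumptions made up for this example. Customer details live in one table and orders reference them by ID, so a change of city is made in a single place.

```python
import sqlite3

# In-memory database used purely for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL,
        city        TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        amount      REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Ada', 'Phoenix');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0);
""")

# The city is stored once, so one update is enough for every order.
conn.execute("UPDATE customers SET city = 'Tucson' WHERE customer_id = 1")

rows = conn.execute("""
    SELECT o.order_id, c.name, c.city
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    ORDER BY o.order_id
""").fetchall()
print(rows)  # [(10, 'Ada', 'Tucson'), (11, 'Ada', 'Tucson')]
```

Had the city been copied onto every order row, the same change would have required updating each row and risked leaving some out of date.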

OLTP vs OLAP

OLTP (Online Transaction Processing) systems prioritize fast and reliable transactional tasks, while OLAP (Online Analytical Processing) focuses on complex queries and analytics over large volumes of data. They serve different database functions and cater to specific business needs.
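The contrast can be sketched with Python's built-in `sqlite3` module. This is only an illustration: real systems typically use separate engines for each workload, and the `sales` table and its rows are invented for the example. The OLTP-style part records individual sales as small transactions; the OLAP-style part runs one aggregate query across all of them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
)

# OLTP-style workload: many small writes, each committed as its own transaction.
for region, amount in [("West", 120.0), ("East", 80.0), ("West", 30.0)]:
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO sales (region, amount) VALUES (?, ?)", (region, amount)
        )

# OLAP-style workload: one query that summarizes many rows at once.
totals = conn.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
).fetchall()
print(totals)  # [('East', 80.0), ('West', 150.0)]
```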

Data Modeling

Data modeling is the practice of designing and conceptualizing data structures and relationships. It provides a blueprint for how data is sourced, integrated, maintained, and consumed, forming the backbone of analytics projects.

Constructing a Data Science Platform

Learn to set up an integrated platform where data scientists can experiment, analyze, and gain insights from the available data.

Weeks 19-22

Notebooks

Notebooks, like Jupyter and Zeppelin, are interactive tools that allow for live code execution, visualization, and data analysis in a unified document. They’re essential in exploratory data analysis, machine learning, and sharing insights in an interactive manner.

AWS SageMaker

AWS SageMaker is a cloud service designed to empower users to build, train, and deploy machine learning models with ease. With its integrated tools and scalable environment, SageMaker simplifies the entire machine-learning workflow.

Elastic MapReduce

Elastic MapReduce (EMR) is a managed cluster platform for processing large volumes of data in the cloud. EMR lets you run big data frameworks like Apache Hadoop and Apache Spark efficiently, without the operational overhead of managing the clusters yourself.

Jupyter

Jupyter is an open-source platform that supports interactive data science and scientific computing across numerous programming languages. With its intuitive notebook interface, users can write and execute code, visualize data, and create shareable documents.

Visualization

Visualization involves transforming raw data into graphical representations like charts and graphs. By converting complex data sets into visually intuitive formats, data engineers can create clearer insights and more compelling stories.

READY TO CHANGE YOUR LIFE? APPLY FOR THE NEXT BOOTCAMP

MAY 28: Data Engineering Bootcamp, Classes Begin

JUN 27: Data Engineering Bootcamp, Classes Begin

JUL 31: Data Engineering Bootcamp, Classes Begin

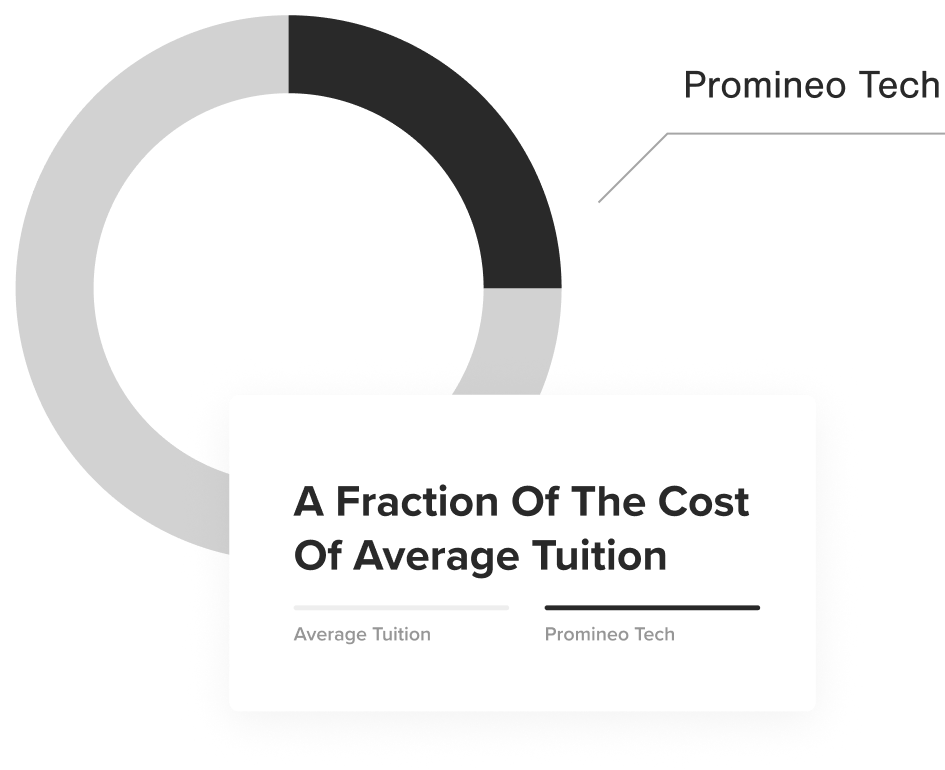

A MORE AFFORDABLE, ACCESSIBLE EDUCATION

The average coding bootcamp costs over $13,500. With our flipped-teaching model and stellar partners, we provide top-tier programs for much less, lowering the financial barriers to life-changing education.

Frequently Asked Questions

Is the course instructor-led or self-led?

The course is instructor-led and live online.

How long is the course?

The course itself is 19 weeks long, which equates to just over four and a half months. You’ll meet for class once a week for 18 weeks and spend the final week of the program working on your final project in lieu of class.

Are class sessions recorded?

Every class is recorded and available to students to review throughout the course. If a scheduling conflict arises, attendance requirements can be met by watching the video on occasion. This should not happen frequently, but life happens!

What is the background and experience of your instructors?

Most of our instructors work in the industry by day in addition to teaching. Our instructors have worked at Keap/Infusionsoft, USAA, Walmart, University of Arizona, Choice Hotels, American Express, Hightouch, US Army, FactSet, University of Wisconsin – Stevens Point, Mayo Clinic, and Whoop, among other national and multinational companies.

What is the structure of the program?

The program follows a flipped classroom model: students review the curriculum before lectures and come to class already familiar with the relevant concepts. The bootcamp is part-time and designed for full-time working adults. However, students who are absolute beginners may need to invest extra time into learning the more advanced modules.

What technologies are covered?

There are many technologies covered in this program, including SQL, Python, AWS (SageMaker, Athena, RDS, Glue, EMR, Aurora, Kinesis, and Redshift), Hadoop, Hive, Hue, Spark, Jupyter, and more.

What types of jobs will grads be prepared for?

Successful completion of the program will help you prepare for types of roles such as front-end developer, web developer, UI developer, JavaScript developer, web designer, UX/UI designer, front-end web engineer, mobile app developer (using web technologies), CMS developer/theme developer, SVG animator, performance/optimization specialist, accessibility specialist.

It Is Estimated There Will Be A Shortage Of 1.4 Million Software Developers In The US Within The Next Year. Start Your Career Today.

Where Our Alumni Work

Ready to Enroll

Take our short, 12-question assessment

Software Engineer Pre-Assessment


Call to speak to a Program Specialist (480) 774-7842
